For a private project, I wanted to have a look at the German radar archive. I found that they have quasi-calibrated 5-minute precipitation data on a 1km2 grid available in the public domain. So, let’s download and process the data set, so that it is easier to use for further data analysis.

This post is part of the germanRADARanalysis project. All code is available on GitHub.

Download data

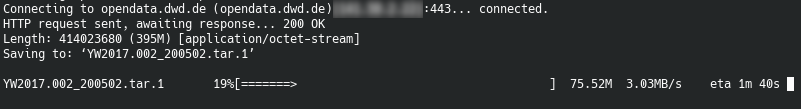

The RADKLIM DWD data archive contains now more than 20 years of 5-minute radar images. The data set is organized as monthly tar archives, of which each one again contains daily tar.gz archives. At the bottom of the data set are individual 5-minute binary files. The following script will download all tar files from 2001 to 2020 for the months of March to November. The wget command accepts a bandwidth limit. Here, I limit the download rate to 2MB/s.

#!/bin/bash

years=(2001 2002 2003 2004 2005 2006 2007 2008 2009 2010 2011 2012 2013 2014 2015 2016 2017 2018 2019 2020)

months=("04" "05" "06" "07" "08" "09")

for year in ${years[@]}

do

for month in ${months[@]}

do

wget --limit-rate 2048k https://opendata.dwd.de/climate_environment/CDC/grids_germany/5_minutes/radolan/reproc/2017_002/bin/${year}/YW2017.002_${year}${month}.tar

done

done

Code language: Bash (bash)Convert binary data and merge into daily NetCDF files

To convert the binary files to NetCDF, I use R with a couple of packages (see script header). The script expects that the tar files have been extracted into a directory called download/. The daily NetCDF files will be written into processed_bin/. The conversion runs in parallel on all available processor cores. Note that here I do not bother about the axis coordinates. For my purpose, I am fine with the generic RADKLIM grid. At this point, I want to highlight the dwdradar R package. It makes reading the DWD binary format in R a walk in the park. Have a look at the rdwd package, as well.

library("dwdradar")

library("raster")

library("ncdf4")

library("doFuture")

library("iterators")

# define environment

in.path <- "download/"

out.path <- "processed_bin/"

months <- sprintf("%02d", c(3, 10, 11))

years <- as.character(seq(2001, 2020))

days <- sprintf("%02d", seq(1, 31))

hours <- sprintf("%02d", seq(0, 23))

minutes <- sprintf("%02d", seq(0, 55, 5))

# define radklim grid

x.lowerleft <- c(-443.462)

y.lowerleft <- c(-4758.645)

nx <- 900

ny <- 1100

unit.coords <- "km"

x.coords <- seq(from = x.lowerleft,

by = 1,

length.out = nx)

y.coords <- seq(from = y.lowerleft,

by = 1,

length.out = ny)

# Set up the environment for parallel processing

registerDoFuture()

plan(multisession)

out.n <- foreach(year = iter(years)) %dopar% {

for (month in months) {

for (day in days) {

print("Processing:")

# construct tar.gz archive file name and check if it exists

tarfile <-

paste(in.path, "YW2017.002_", year, month, day, ".tar.gz", sep = "")

extract.dir <-

paste(in.path, "YW2017.002_", year, month, day, "/", sep = "")

print(tarfile)

if (!file.exists(tarfile)) {

print("File for day does not exist")

next

}

# create a daily data set

# open and define the nc file for writing

out.fname <-

paste(

out.path,

"/raa01-yw2017.002_10000-",

substr(year, 3, 4),

month,

day,

"-dwd---bin.nc",

sep = ""

)

# define the time index for this particular day

start <-

as.POSIXct(paste(year, month, day, " 00:00", sep = ""),

format = "%Y%m%d %H:%M",

tz = "UTC")

end <-

as.POSIXct(paste(year, month, day, " 23:55", sep = ""),

format = "%Y%m%d %H:%M",

tz = "UTC")

datetime <- as.numeric(seq(

from = start,

by = 300,

to = end

))

# Define some straightforward dimensions

x <- ncdim_def("x", "km", x.coords)

y <- ncdim_def("y", "km", y.coords)

t <-

ncdim_def(

"time",

"seconds since 1970-01-01",

datetime,

unlim = TRUE,

calendar = "gregorian"

)

# variable

new.var <-

ncvar_def(

"precipitation",

units = "mm/5min",

dim = list(x, y, t),

missval = -9.0,

prec = "float",

compression = 6

)

nc.out <- nc_create(out.fname, new.var)

# create temporary directory and extract data to it

system(paste("mkdir -p", extract.dir))

system(paste("tar xvf", tarfile, "-C", extract.dir))

# create the array that will hold all matrixes for this day

ts <- 1 # counter for time steps

# start reading 5 minute binary files and save them as NetCDF

for (hour in hours) {

for (minute in minutes) {

# read the binary file

in.bin <-

paste(

extract.dir,

"raa01-yw2017.002_10000-",

substr(year, 3, 4),

month,

day,

hour,

minute,

"-dwd---bin",

sep = ""

)

data.bin <- readRadarFile(in.bin, clutter = 0.0)

data.bin <- t(data.bin$dat)[, seq(ny, 1,-1)]

# write the whole day into the netCDF file and close it

ncvar_put(

nc.out,

"precipitation",

data.bin,

start = c(1, 1, ts),

count = c(nx, ny, 1)

)

ts <- ts + 1

}

}

nc_close(nc.out)

# remove after operations

system(paste("rm -rf", extract.dir))

}

}

}Code language: R (r)Concatenate daily to monthly NetCDF files

This task is rather easy and only requires the tool cdo. cdo will concatenate the daily files along the time axis to monthly files.

#!/bin/bash

set -ex

in_dir="./processed_bin/"

out_dir="./processed_final/"

for year in $(seq -f "%04g" 2001 2020)

do

for month in $(seq -f "%042g" 3 11)

do

# merge to monthly files

cdo -f nc4 -z zip_6 mergetime ${in_dir}/raa01-yw2017.002_10000-${year:2:2}${month}*-dwd---bin.nc ${out_dir}/raa01-yw2017.002_10000-${year}${month}-dwd---bin.nc

done

doneCode language: Bash (bash)Afterwards, the directory with the daily data files can be deleted.

Summary and further processing

There you have it. In a few steps we converted the somewhat cumbersome RADKLIM data set into a nicely structured set of NetCDF files. If you need the coordinates in geographic Lat/Lon format, I wrote a function that will do the job.

convertRADKLIMtoLatLon <- function(x = -443462, y = -4758645) {

# constants

lambda0 <- 10 * pi / 180

phi0 <- 60 * pi / 180

Rearth <- 6370040

# calculate coordinates

lon <- atan(-1 * x / y) + lambda0

lat <-

asin((Rearth ** 2 * (1 + sin(phi0)) ** 2 - (x ** 2 + y ** 2)) / (Rearth **

2 * (1 + sin(phi0)) ** 2 + (x ** 2 + y ** 2)))

# convert from radians to degree

lon <- lon * 180 / pi

lat <- lat * 180 / pi

if (!is.vector(x)) {

result <- c(lon, lat)

names(result) <- c("lon", "lat")

} else{

result <- data.frame(lon = lon, lat = lat)

}

return(result)

}Code language: R (r)Further reading

- RADKLIM data descripion

- Download location

- rdwd and dwdradar R packages